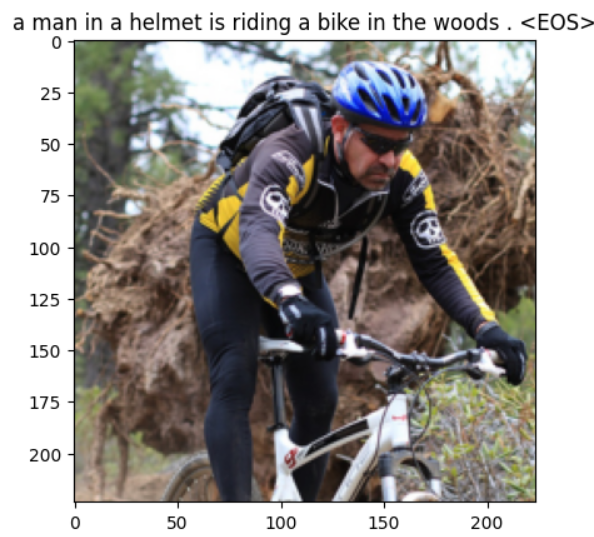

Advanced Image Caption Generation using Attention Mechanism

This project combines computer vision and natural language processing, using neural network architectures to generate captions for images.

Project Overview:

- The project refines the encoder-decoder architecture: a Convolutional Neural Network (CNN) encoder extracts salient features from each image, and a Recurrent Neural Network (RNN) decoder, in particular a Long Short-Term Memory (LSTM) network, generates the caption from those features.

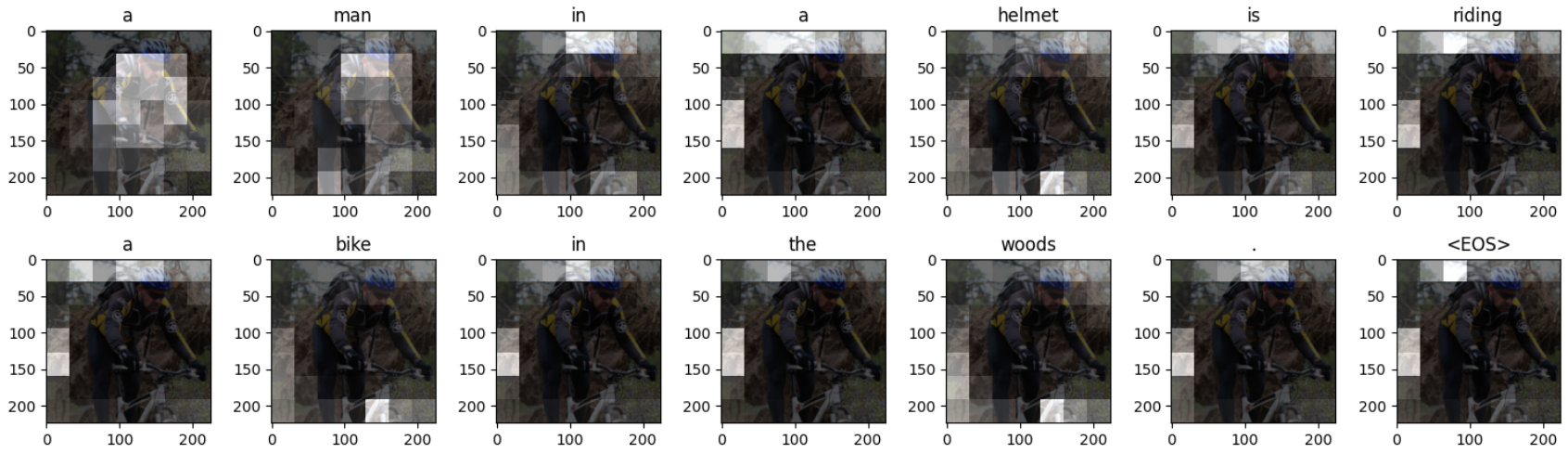

- The key addition is an attention mechanism, which lets the decoder focus on different regions of the image while generating the caption, improving the quality and relevance of the descriptions.
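
The attention step described above can be sketched in a few lines of plain Python. This is only an illustration, not the project's actual code: the function names `softmax` and `attend`, the dot-product scoring, and the toy 2-dimensional features are all assumptions (the project may well use a learned additive score on CNN feature maps instead). It shows how one decoder state is turned into weights over image regions and a weighted context vector.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(region_features, decoder_state):
    """Hypothetical dot-product attention over image regions.

    region_features: list of L vectors, one per image region (from the CNN).
    decoder_state:   one vector of the same length (the LSTM hidden state).
    Returns the context vector and the attention weights.
    """
    # Score each region by its similarity to the current decoder state.
    scores = [sum(f * h for f, h in zip(region, decoder_state))
              for region in region_features]
    weights = softmax(scores)
    # Context vector: attention-weighted average of the region features.
    dim = len(decoder_state)
    context = [sum(w * region[d] for w, region in zip(weights, region_features))
               for d in range(dim)]
    return context, weights

# Toy example: three regions with 2-dimensional features (made-up values).
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
state = [2.0, 0.0]
context, weights = attend(regions, state)
```

In a full model, the context vector would be fed to the LSTM together with the previous word at every decoding step, so the weights change from word to word.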

The attention mechanism improves the accuracy and relevance of the captions, as reflected in the BLEU scores of 50-55 that were consistently recorded during evaluation.
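
For readers unfamiliar with the metric, here is a minimal sketch of how a BLEU score can be computed for one caption against one reference. It is not the evaluation script used in this project: the function names `ngrams` and `bleu`, the uniform n-gram weights, the single reference, and the lack of smoothing are assumptions, and the project does not state which BLEU variant produced the 50-55 range.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count every n-gram (as a tuple) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU on a 0-100 scale, single reference, no smoothing."""
    precisions = []
    for n in range(1, max_n + 1):
        cand = ngrams(candidate, n)
        ref = ngrams(reference, n)
        # Clipped counts: a candidate n-gram is credited at most as often
        # as it appears in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if total == 0 or overlap == 0:
            return 0.0  # without smoothing, one empty precision zeroes the score
        precisions.append(overlap / total)
    # Brevity penalty: penalize candidates shorter than the reference.
    if len(candidate) >= len(reference):
        bp = 1.0
    else:
        bp = math.exp(1 - len(reference) / len(candidate))
    # Geometric mean of the n-gram precisions, scaled to 0-100.
    return 100.0 * bp * math.exp(sum(math.log(p) for p in precisions) / max_n)

# Made-up captions for illustration only.
reference = "a dog runs across the grass".split()
candidate = "a dog runs on the grass".split()
score = bleu(candidate, reference, max_n=2)
```

Corpus-level BLEU, which is what is usually reported, pools the n-gram counts over all captions before taking the precisions, so it is not simply the average of per-sentence scores.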